A Chatbot Warning System Against Online Predators

November 18, 2020

Protecting Children

Today, children face an evolving threat: online violence. Violence against and harassment of children have been growing rapidly for more than 20 years, but with the recent events that closed schools for more than 1 billion children around the world, children are more vulnerable than ever. Online predators use Internet avenues popular with children and adolescents, such as game chat rooms, to lure them into sexual exploitation and even in-person assaults.

Protection against online sexual violence varies greatly from platform to platform. Some gaming platforms include a profanity filter that looks for problematic words and replaces them with a string of asterisks. Outside the gaming platforms, many chat platforms still have no safeguards in place to protect children from predatory adult conversations. However, chat logs can provide information on how a predator might attempt to draw children and young adults into risky situations, such as sharing photos, using web cameras, and sexting (sexual texting).
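To make the profanity-filter idea concrete, here is a minimal sketch of word masking. It is not taken from any particular gaming platform; the `BLOCKED_WORDS` list and the `mask_blocked_words` function are hypothetical names chosen for illustration, and the two mild words stand in for a real curated list.

```python
import re

# Hypothetical blocklist for illustration; a real platform would
# maintain a far larger, curated list.
BLOCKED_WORDS = {"darn", "heck"}

def mask_blocked_words(message, blocked=BLOCKED_WORDS):
    """Replace each blocked word with asterisks of the same length."""
    def mask(match):
        word = match.group(0)
        # Compare case-insensitively so "Heck" is caught as well.
        return "*" * len(word) if word.lower() in blocked else word
    # Visit every run of letters/apostrophes and mask it if blocked.
    return re.sub(r"[A-Za-z']+", mask, message)

print(mask_blocked_words("Well darn, that is fine"))
```

A filter like this only matches exact words, so it says nothing about the intent of a conversation, which is the gap the rest of this article tries to address.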

Often, pattern recognition techniques are used to identify these conversations automatically for potential law enforcement intervention.

Unfortunately, this strategy consumes many man-hours and spans many message categories, which makes these patterns all the more difficult to identify. It is a challenging task, but one that is worth tackling, and we elaborate on our approach in the rest of the article.

STEPHEN ORSILLO/SHUTTERSTOCK

First, let us establish our working definitions.

- The New Oxford American Dictionary defines a predator as a “person or group that ruthlessly exploits others.”

- Daniel M. Filler expands the term to a sexual predator: “a person seen as obtaining or trying to obtain sexual contact or favor with another person in a metaphorically ‘predatory’ manner” (Virginia Journal of Social Policy & the Law, 2003).

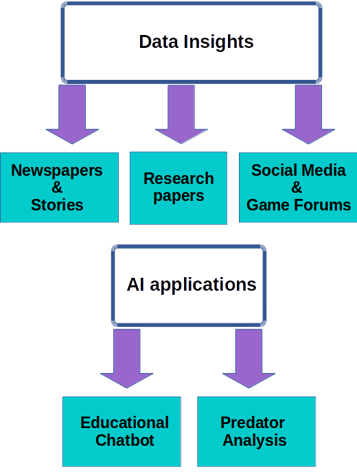

The Solution — Data Engineers Unite!

The team focused on the solution’s Predator Analysis portion.

Our solution aimed to reduce man-hours and to add a near real-time warning system to the chat that alerts the child when the sentiment of the conversation changes. The team used a semi-supervised approach to evaluate whether a conversation poses a low, medium, or high risk to the child. The system evaluates each phrase or sentence and returns a sentiment warning if warranted. The data for our chatbot (Predator-Pseudo-Victim conversations) was collected from interactions between a predator and a law enforcement officer or volunteer posing as a child.
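The warning flow described above can be sketched roughly as follows. This is an illustration only, not the team's code: `warnings_for`, `RISK_RANK`, and `toy_classifier` are hypothetical names, and the keyword-based classifier is a stand-in for the trained model, which is not shown in this article.

```python
RISK_RANK = {"low": 0, "medium": 1, "high": 2}

def warnings_for(messages, classify):
    """Return (message index, risk level) each time the risk level rises."""
    alerts = []
    previous = "low"  # assume every conversation starts out benign
    for index, text in enumerate(messages):
        level = classify(text)
        if RISK_RANK[level] > RISK_RANK[previous]:
            alerts.append((index, level))
        previous = level
    return alerts

def toy_classifier(text):
    """Stand-in for a trained model; keyword rules for illustration only."""
    lowered = text.lower()
    if "address" in lowered:
        return "high"
    if "secret" in lowered:
        return "medium"
    return "low"

chat = ["hi", "keep this a secret", "what is your address", "ok"]
print(warnings_for(chat, toy_classifier))
```

Warning only when the level rises, rather than on every risky message, is one possible design choice; it keeps the child from being flooded with repeated alerts.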

The chatbot was designed to learn from both non-predatory and predatory conversations and to distinguish between them. Additionally, it would be able to recognize inappropriate messages whether they came from the predator’s or the child’s side. The corpus also had adult-like conversations initiated from the child’s side.

The Dataset

The team consolidated and cleaned nearly 500 chat log files that contained exchanges between a predator and a pseudo-victim. The collection grew into a corpus containing 807,000-plus messages ranging from “hello” to explicit remarks. Creating the dataset proved laborious: I volunteered more than 630 hours just labeling data. The data was labeled with the gender participants identified as in the chats (male or female), the role (predator or victim), and the risk level of the conversation. Nearly half of the project time was dedicated to building and parsing the dataset properly.

This dataset was split into training, development, and test sets. The training set held 75 percent of all messages, from which the chatbot learned the contextual format and nuances of conversation. The development set, which was 10 percent of the data, was held out from the chatbot until after model selection to validate the model.
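A split along these lines could look like the sketch below. The function name `split_messages` and the fixed seed are assumptions for illustration, as is the 15 percent test share, which is simply what remains after the 75 and 10 percent stated above. The article also does not say whether the split was made per message or per conversation; this sketch splits per message.

```python
import random

def split_messages(messages, train_frac=0.75, dev_frac=0.10, seed=0):
    """Shuffle and split into (train, dev, test); test gets the remainder."""
    shuffled = list(messages)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_dev = int(len(shuffled) * dev_frac)
    train = shuffled[:n_train]
    dev = shuffled[n_train:n_train + n_dev]
    test = shuffled[n_train + n_dev:]  # assumed to be the remaining 15 percent
    return train, dev, test

train, dev, test = split_messages(range(1000))
print(len(train), len(dev), len(test))
```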

This two-minute video briefly explains how the team assembled the chatbot’s dataset.

Data Format and Storage

The data was housed in a relational database. It grew large enough to serve as a central hub from which uniquely formatted datasets could be drawn for the machine learning pipeline.
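As a rough idea of what such a store might look like, here is a small sketch using SQLite from the Python standard library. The table and column names are hypothetical; the article does not describe the actual schema or database engine. The labels mirror the ones mentioned earlier: gender, role, and conversation risk level.

```python
import sqlite3

# Hypothetical schema; the real database layout is not described in the article.
SCHEMA = """
CREATE TABLE conversations (
    conversation_id INTEGER PRIMARY KEY,
    risk_level TEXT CHECK (risk_level IN ('low', 'medium', 'high'))
);
CREATE TABLE messages (
    message_id INTEGER PRIMARY KEY,
    conversation_id INTEGER NOT NULL REFERENCES conversations(conversation_id),
    speaker_role TEXT CHECK (speaker_role IN ('predator', 'victim')),
    speaker_gender TEXT,
    body TEXT NOT NULL
);
"""

def build_demo_db():
    """Create an in-memory database with one tiny example conversation."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.execute(
        "INSERT INTO conversations (conversation_id, risk_level) VALUES (1, 'medium')"
    )
    conn.executemany(
        "INSERT INTO messages (conversation_id, speaker_role, speaker_gender, body) "
        "VALUES (?, ?, ?, ?)",
        [(1, "predator", "male", "hello"), (1, "victim", "female", "hi")],
    )
    conn.commit()
    return conn

def export_pairs(conn):
    """Return (body, risk_level) rows: one possible dataset shape for a classifier."""
    query = (
        "SELECT m.body, c.risk_level FROM messages AS m "
        "JOIN conversations AS c USING (conversation_id) ORDER BY m.message_id"
    )
    return conn.execute(query).fetchall()

print(export_pairs(build_demo_db()))
```

Different queries against the same tables could feed the chatbot (message sequences) and the sentiment model (message and label pairs), which is the "hub" role described above.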

During the labeling process, a few issues arose about how to define a conversation semantically. With many different log formats, ranging from AOL Instant Messenger to SMS and other online platforms, sentences would start and stop at different points. For the conversations, I adopted a format similar to that of the widely used Cornell Movie-Dialogs Corpus, which provided a standard structure that made the data easy to parse. Additionally, the corpus contained chat slang, abbreviations, and numbers standing in for words, like “l8r” for “later” and “b4” for “before”, which required a team consensus on how to handle these tokens. The team did not focus on timestamps due to extremely varied formatting, missing values, and their lack of importance to the overall project.
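The sketch below illustrates both ideas: parsing a line laid out in the style of the Cornell corpus, which separates fields with ` +++$+++ `, and expanding chat slang. The field order, the `parse_line` and `normalize` names, and the two-entry slang table are assumptions for illustration; our actual field layout and the team's final handling of slang are not reproduced here.

```python
SEPARATOR = " +++$+++ "  # field separator used by the Cornell movie corpus files

# Tiny illustrative table; a real one would be much longer.
SLANG = {"l8r": "later", "b4": "before"}

def parse_line(raw):
    """Split one Cornell-style line into named fields (assumed field order)."""
    line_id, speaker_id, conversation_id, speaker_label, text = (
        raw.rstrip("\n").split(SEPARATOR, 4)
    )
    return {
        "line_id": line_id,
        "speaker_id": speaker_id,
        "conversation_id": conversation_id,
        "speaker_label": speaker_label,
        "text": text,
    }

def normalize(text, slang=SLANG):
    """Replace known slang tokens with their expanded form."""
    return " ".join(slang.get(token.lower(), token) for token in text.split())

record = parse_line("L12 +++$+++ u1 +++$+++ c3 +++$+++ victim +++$+++ c u l8r")
print(normalize(record["text"]))
```

Expanding slang is only one option; keeping the original tokens so the model learns them directly is another, and which is better depends on how much data is available.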

The Model

Many models were candidates for the chatbot’s internal workings. The team’s main goal was to keep the solution local and offline for now, in order to reduce privacy concerns and legal issues. Future iterations of this project would evaluate online operation with appropriate development operations practices.

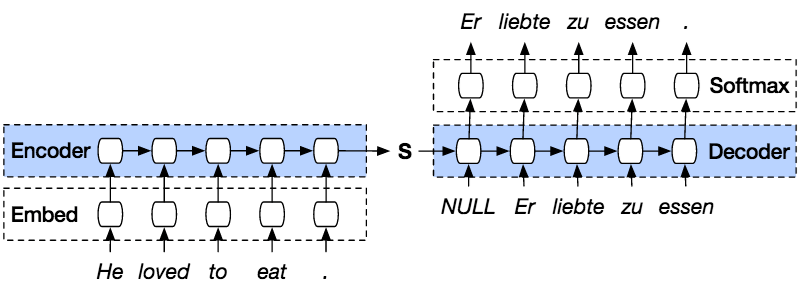

Basic sequence-to-sequence model diagram.

The selected model centered on a Long Short-Term Memory (LSTM) network cell, arranged in a sequence-to-sequence configuration. LSTMs have long proven well suited to sequential data. Our application uses this ability to help the chatbot predict the next plausible word to use in its response.
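For readers unfamiliar with LSTMs, the sketch below shows a single time step of a standard LSTM cell in plain Python. It is a teaching illustration of the textbook cell equations, not the project's TensorFlow model; the `lstm_step` name and the `params` layout are assumptions made for this example.

```python
import math

def _sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, params):
    """Advance a standard LSTM cell by one time step.

    params maps each gate name ("i", "f", "o", "g") to a (W, U, b) triple:
    W is hidden x input, U is hidden x hidden, and b has length hidden.
    """
    def pre_activation(gate):
        W, U, b = params[gate]
        return [
            sum(w * xj for w, xj in zip(W[row], x))
            + sum(u * hk for u, hk in zip(U[row], h_prev))
            + b[row]
            for row in range(len(h_prev))
        ]

    i = [_sigmoid(v) for v in pre_activation("i")]   # input gate
    f = [_sigmoid(v) for v in pre_activation("f")]   # forget gate
    o = [_sigmoid(v) for v in pre_activation("o")]   # output gate
    g = [math.tanh(v) for v in pre_activation("g")]  # candidate cell values
    # New cell state: keep part of the old memory, add part of the candidate.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # New hidden state: a gated view of the squashed cell state.
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c
```

In a sequence-to-sequence setup, an encoder runs steps like this over the incoming message, and a decoder, started from the encoder's final state, produces the reply one word at a time.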

For the sentiment analysis portion, we focused our efforts on an ensemble learning model as well as a support vector machine to help predict when the conversation changed from benign to risky.
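One simple way to combine several classifiers is hard (majority) voting, sketched below. The article does not specify which kind of ensemble was used, so this is an assumed illustration; `majority_vote`, `RISK_LEVELS`, and the rule of preferring the higher risk on ties are hypothetical choices for this example.

```python
from collections import Counter

RISK_LEVELS = ["low", "medium", "high"]  # ordered from least to most risky

def majority_vote(predictions):
    """Combine per-model risk labels by majority vote.

    Ties are broken toward the higher risk level, a deliberately
    cautious choice for a warning system.
    """
    counts = Counter(predictions)
    best = max(counts.values())
    tied = [label for label in RISK_LEVELS if counts[label] == best]
    return tied[-1]

# Labels as they might come from three separate models for one message.
print(majority_vote(["low", "high", "high"]))
```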

Conclusion

Our team successfully built a chatbot and a sentiment analysis model independently. The chatbot learned from its more than 807,000 messages how to parse sentences and structure a proper response. Its limited vocabulary stemmed from limited training time and framework limitations.

The greatest challenge to code performance centered on the chosen platform, TensorFlow 1.0.0, and the limitations it imposed. The code did produce a conversation-capable entity, but the model needs more training data if we want to go beyond a proof of concept and deploy it in an application.

The project successfully employed message sentiment analysis and was able to warn the user of potentially risky conversations initiated by online predators. The sentiment analysis rated each conversation as a low, medium, or high level of risk.

Future work will move this project into a fully functioning TensorFlow 2.1.0 environment, eliminating other frameworks, including PyTorch. The internal model will receive an update to the LSTM structure, and performance will be improved with the use of graphics processing units (GPUs), such as NVIDIA hardware with its cuDNN library.

—

This article was written by Jeremy Wood and Guneet Singh Kohli.
