Satellite Image Analysis to Identify Trees and Prevent Fires

How to Build a High-Impact Deep Learning Model for Tree Identification with more than 95 percent accuracy to help prevent forest fire outbreaks.

April 26, 2020

Four weeks ago, 35 AI experts and data scientists from 16 countries came together through the Omdena platform. The participants formed self-organized task groups, and each task group took on either one part of the challenge or one approach to solving it.

Forming the Task Groups

Omdena’s platform is a self-organized learning environment. After the first kick-off call, the collaborators started to organize themselves into task groups. Below are screenshots of some of the discussions that took place in the first days of the project.

Task Group 1: Labeling

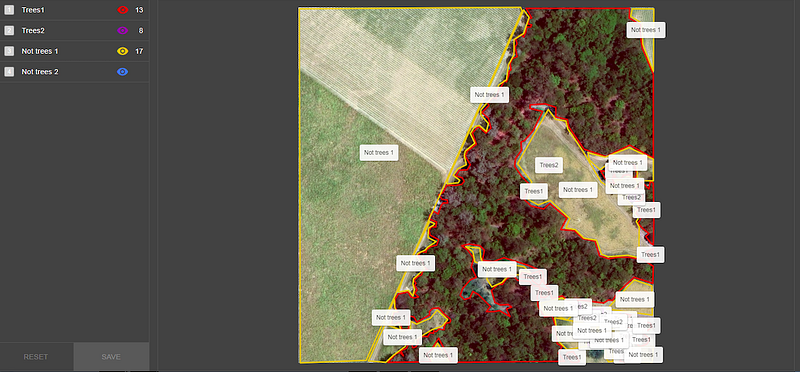

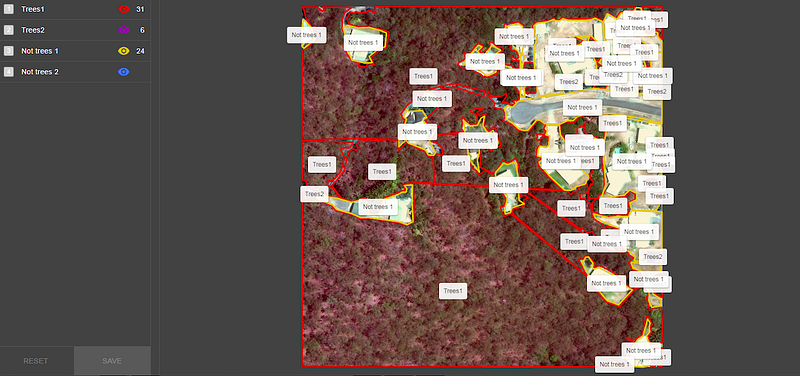

We labeled over 1,000 images. Working as a large group made labeling not only faster but also more accurate, thanks to our peer-to-peer review process.

Active collaborators: Leonardo Sanchez (Task Manager, Brazil), Arafat Bin Hossain (Bangladesh), Sim Keng Ying (Singapore), Alejandro Bautista Ramos (Mexico), Santosh Kumar Pydipalli (India), Gerardo Duran (Mexico), Annie Tran (USA), Steven Parra Giraldo (Colombia), Bishwa Karki (Nepal), Isaac Rodríguez Bribiesca (Mexico).

Labeled images

Task Group 2: Generating images through GANs

Given a training set, GANs can be used to generate new data with the same features as the training set.

Active Participants: Santiago Hincapie-Potes (Task Manager, Colombia), Amit Singh (Task Manager for DCGAN, India), Ramon Ontiveros (Mexico), Steven Parra Giraldo (Colombia), Isaac Rodríguez (Mexico), Rafael Villca (Bolivia), Bishwa Karki (Nepal).

Output from GAN

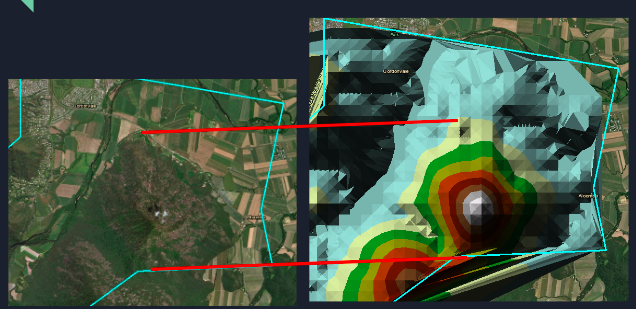

Task Group 3: Generating elevation model

The task group is using a Digital Elevation Model and a triangulated irregular network. Knowing the elevation of the land as well as the height of the trees will help us assess the risk that trees pose to overhead cables.

Active Participants: Gabriel Garcia Ojeda (Mexico)

Task Group 4: Sharpening the images

The task group built a set of image-processing steps, tried different combinations of filters, and implemented a basic pipeline to automate testing those combinations. The goal was to preprocess the labeled images so that the AI models could achieve more accurate results.
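As a rough sketch of what such a pipeline might look like, the snippet below runs every ordered combination of a few filters over a grayscale image stored as nested lists. The filter names (`sharpen`, `box_blur`, `stretch_contrast`), the kernels, and the `run_combinations` helper are illustrative assumptions, not the filters the task group actually used.

```python
from itertools import permutations

def convolve3x3(img, kernel):
    """Apply a 3x3 kernel; border pixels are copied unchanged, results clipped to 0..255."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            acc = sum(kernel[j][i] * img[y + j - 1][x + i - 1]
                      for j in range(3) for i in range(3))
            out[y][x] = max(0, min(255, round(acc)))
    return out

def sharpen(img):
    # Common 3x3 sharpening kernel (hypothetical choice for this sketch).
    return convolve3x3(img, [[0, -1, 0], [-1, 5, -1], [0, -1, 0]])

def box_blur(img):
    # Mean of the 3x3 neighborhood.
    return convolve3x3(img, [[1 / 9] * 3 for _ in range(3)])

def stretch_contrast(img):
    # Linearly rescale pixel values to the full 0..255 range.
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:
        return [row[:] for row in img]
    return [[round((p - lo) * 255 / (hi - lo)) for p in row] for row in img]

FILTERS = {"sharpen": sharpen, "box_blur": box_blur, "stretch_contrast": stretch_contrast}

def run_combinations(img, max_len=2):
    """Return {tuple of filter names: filtered image} for every ordered combination."""
    results = {}
    for n in range(1, max_len + 1):
        for names in permutations(FILTERS, n):
            out = img
            for name in names:
                out = FILTERS[name](out)
            results[names] = out
    return results
```

Each result could then be fed to the same model and scored, so that the best-performing combination is chosen by measurement rather than by eye.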

Active Participants: Lukasz Kaczmarek (Task Manager, Poland), Cristian Vargas (Mexico), Rodolfo Ferro (Mexico), Ramon Ontiveros (Mexico).

Output after sharpening

Task Group 5: Detecting trees through Mask R-CNN model

Mask R-CNN was developed by the Facebook AI Research (FAIR) team. In the first step, the model generates a set of bounding boxes that may contain trees. The second step is to color each detection according to the model's certainty.
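The coloring step can be illustrated with a small sketch. It assumes each detection is a dictionary with a `box` and a `score` between 0 and 1. That data layout, the 0.5 threshold, and the red-to-green color scale are hypothetical choices for illustration and are not taken from the task group's code.

```python
def confidence_to_rgb(score):
    """Map a confidence in [0, 1] to a color from red (0.0) to green (1.0)."""
    s = max(0.0, min(1.0, score))
    return (round(255 * (1 - s)), round(255 * s), 0)

def color_detections(detections, min_score=0.5):
    """Keep detections at or above min_score and attach a color to each."""
    return [
        {**det, "color": confidence_to_rgb(det["score"])}
        for det in detections
        if det["score"] >= min_score
    ]

# Hypothetical detections: (x1, y1, x2, y2) boxes with confidence scores.
detections = [
    {"box": (12, 30, 80, 95), "score": 0.97},
    {"box": (140, 22, 190, 70), "score": 0.62},
    {"box": (60, 200, 95, 240), "score": 0.31},
]
colored = color_detections(detections)  # the 0.31 detection is dropped
```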

Active Participants: Kathelyn Zelaya (Task Manager, USA), Annie Tran (USA), Shubhajit Das (India), Shafie Mukhre (USA).

Mask R-CNN output

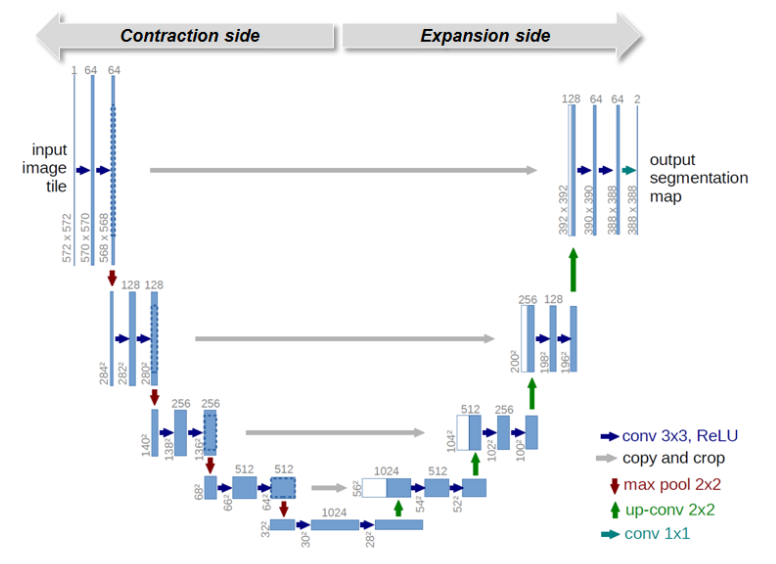

Task Group 6: Detecting trees through U-Net and Deep U-Net models

U-Net was initially used for biomedical image segmentation, but because of the good results it achieved, it is now being applied to a variety of other tasks. It is one of the best network architectures for image segmentation. We applied the same architecture to identifying trees and got very encouraging results, even when training with fewer than 50 images.

Active Participants: Pawel Pisarski (Task Manager, Canada), Arafat Bin Hossain (Bangladesh), Rodolfo Ferro (Mexico), Juan Manuel Ciro Torre (Colombia), Leonardo Sanchez (Brazil).

The U-Net consists of a contracting path and an expansive path, which together give it its U shape. The contracting path is a typical convolutional network: it repeatedly applies convolutions, each followed by a rectified linear unit (ReLU), and then a max-pooling operation that reduces the spatial resolution.
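To make the contracting path concrete, here is a minimal pure-Python sketch of ReLU and 2 x 2 max pooling on a tiny feature map, plus a helper that traces how the resolution shrinks level by level. The helper assumes "same"-padded convolutions, so only pooling changes the size, and a channel count that doubles at each level starting from 64. Both are common choices but are assumptions here, as is the function name `contracting_shapes`.

```python
def relu(fmap):
    """Replace negative activations with zero."""
    return [[max(0, v) for v in row] for row in fmap]

def max_pool_2x2(fmap):
    """2x2 max pooling with stride 2; assumes even height and width."""
    return [
        [max(fmap[y][x], fmap[y][x + 1], fmap[y + 1][x], fmap[y + 1][x + 1])
         for x in range(0, len(fmap[0]), 2)]
        for y in range(0, len(fmap), 2)
    ]

def contracting_shapes(size, base_channels, levels):
    """(side length, channels) per level, assuming 'same'-padded convolutions."""
    return [(size // 2 ** i, base_channels * 2 ** i) for i in range(levels + 1)]

fmap = [[-3, 1, 0, 2],
        [4, -1, -2, -5],
        [0, 0, 7, -8],
        [-6, 3, 1, 1]]
pooled = max_pool_2x2(relu(fmap))        # [[4, 2], [3, 7]]
shapes = contracting_shapes(512, 64, 4)  # 512 -> 256 -> 128 -> 64 -> 32
```

The expansive path reverses this shrinkage by upsampling, which is why skip connections from the contracting side are needed to restore fine spatial detail.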

U-Net architecture

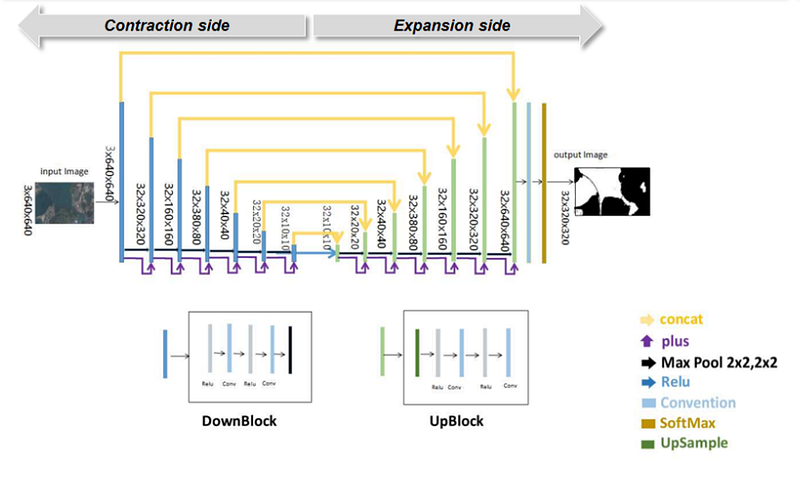

One technique caught our attention: the Deep U-Net. Like the U-Net, the Deep U-Net has a contraction side and an expansion side, and it uses U-connections to pass high-resolution features from the contraction side to the upsampled outputs. In addition, it uses Plus connections to extract information with less loss.
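The two connection types can be sketched on toy data. As we read the Deep U-Net design, a Plus connection adds a block's input to its output element by element (a residual-style shortcut), while a U-connection concatenates contraction-side feature maps with the upsampled ones along the channel axis. The function names and the list-based representation below are assumptions made for illustration only.

```python
def plus_connection(block_input, block_output):
    """Element-wise sum of a block's input and output feature map."""
    return [[a + b for a, b in zip(row_in, row_out)]
            for row_in, row_out in zip(block_input, block_output)]

def u_connection(encoder_channels, upsampled_channels):
    """Concatenate two lists of 2D feature maps along the channel axis."""
    return encoder_channels + upsampled_channels

x = [[1, 2], [3, 4]]            # toy input to a block
fx = [[10, 20], [30, 40]]       # toy output of the block's convolutions
summed = plus_connection(x, fx)             # [[11, 22], [33, 44]]
merged = u_connection([x], [fx, summed])    # 1 + 2 = 3 channels
```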

Deep-U-Net Architecture

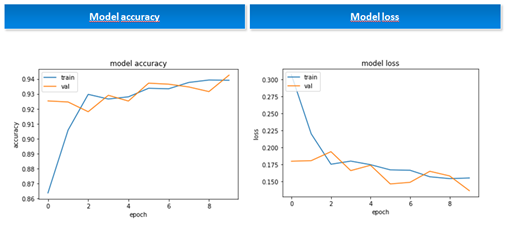

With the architecture in place, we applied a basic Deep U-Net to the 144 unique labeled images, split into 119 images and 119 masks for the training set, 22 images and 22 masks for the validation set, and 3 images and 3 masks for the test set. Because the images and masks were 1,000 x 1,000 pixels, each was cropped into four 512 x 512 tiles, giving 476 images and 476 masks for the training set, 88 images and 88 masks for the validation set, and 12 images and 12 masks for the test set.

We trained the Deep U-Net for 10 epochs with a batch size of 4, using the Adam optimizer and a binary cross-entropy loss on a GeForce GTX 1060 GPU. The results were quite encouraging, reaching 94% accuracy on the validation set.
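The tile counts follow directly from the split sizes: 476 / 119 = 88 / 22 = 12 / 3 = 4 tiles per image. The text does not say how the tiles were positioned. One layout consistent with these numbers, assumed here purely for illustration, is to take the four corner crops, which overlap by 24 pixels because 2 x 512 exceeds 1,000.

```python
def corner_crops(size, crop):
    """Top-left (x, y) offsets of the four corner crops of a size x size image."""
    offsets = (0, size - crop)
    return [(x, y) for y in offsets for x in offsets]

def crop_image(img, x, y, crop):
    """Cut a crop x crop window out of an image stored as nested lists."""
    return [row[x:x + crop] for row in img[y:y + crop]]

splits = {"train": 119, "validation": 22, "test": 3}   # counts from the article
boxes = corner_crops(1000, 512)                        # assumed crop layout
overlap = 2 * 512 - 1000                               # 24 pixels
cropped_counts = {name: n * len(boxes) for name, n in splits.items()}
```

The same offsets must be applied to an image and its mask so that every tile stays aligned with its labels.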

Model Accuracy and Loss

Believing that accuracy could be improved further, we extended the basic solution with data augmentation. Using rotations, we generated 8 augmented images per original image, which gave 3,808 images and 3,808 masks for the training set and 704 images and 704 masks for the validation set.
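A minimal sketch of rotation-based augmentation is shown below. The text does not state which angles were used, so the 8 evenly spaced angles (0, 45, ..., 315 degrees) are an assumption, as are the helper names. The key point the sketch illustrates is that an image and its mask must be rotated by the same angle, with nearest-neighbor sampling so that mask values stay binary.

```python
import math

def rotate_nearest(img, degrees, fill=0):
    """Rotate a square image about its center with nearest-neighbor sampling."""
    n = len(img)
    c = (n - 1) / 2
    cos_a = math.cos(math.radians(degrees))
    sin_a = math.sin(math.radians(degrees))
    out = [[fill] * n for _ in range(n)]
    for y in range(n):
        for x in range(n):
            # Inverse mapping: find the source pixel that lands on (x, y).
            sx = round(cos_a * (x - c) + sin_a * (y - c) + c)
            sy = round(-sin_a * (x - c) + cos_a * (y - c) + c)
            if 0 <= sx < n and 0 <= sy < n:
                out[y][x] = img[sy][sx]
    return out

def augment_pair(image, mask, n_rotations=8):
    """Return n_rotations (image, mask) pairs rotated by evenly spaced angles."""
    angles = [i * 360 / n_rotations for i in range(n_rotations)]
    return [(rotate_nearest(image, a), rotate_nearest(mask, a)) for a in angles]

image = [[1, 2], [3, 4]]
mask = [[0, 1], [1, 0]]
pairs = augment_pair(image, mask)   # 8 pairs, the first one unrotated
```

With 8 variants per tile, 476 training tiles become 476 x 8 = 3,808 and 88 validation tiles become 88 x 8 = 704, matching the counts above. Rotations that are not multiples of 90 degrees leave empty corners, which this sketch fills with zeros.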

We retrained the previous model, keeping the base learning rate at 0.001 but adding a decay inversely proportional to the number of epochs, and increased the number of epochs to 100.
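One common reading of such a schedule is inverse-time decay, where the learning rate at a given epoch is the base rate divided by (1 + decay x epoch). The sketch below uses that form. The base rate and epoch count come from the text, while the decay coefficient of 0.01 is a hypothetical value, since the text does not give one.

```python
def inverse_time_decay(base_lr, decay, epoch):
    """Learning rate at a given epoch: base_lr / (1 + decay * epoch)."""
    return base_lr / (1.0 + decay * epoch)

BASE_LR = 0.001   # from the article
EPOCHS = 100      # from the article
DECAY = 0.01      # hypothetical: the article does not state this value

schedule = [inverse_time_decay(BASE_LR, DECAY, e) for e in range(EPOCHS)]
```

With these values the rate starts at 0.001 and has roughly halved by the end of training, which lets the model take large steps early and finer steps later.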

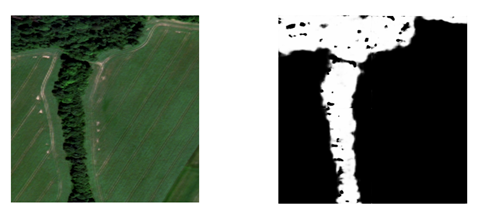

The Deep U-Net model learned to distinguish trees in new images very well. It even classified shadows within forests as non-tree areas, reproducing what we humans did during the labeling process, but with even better performance.

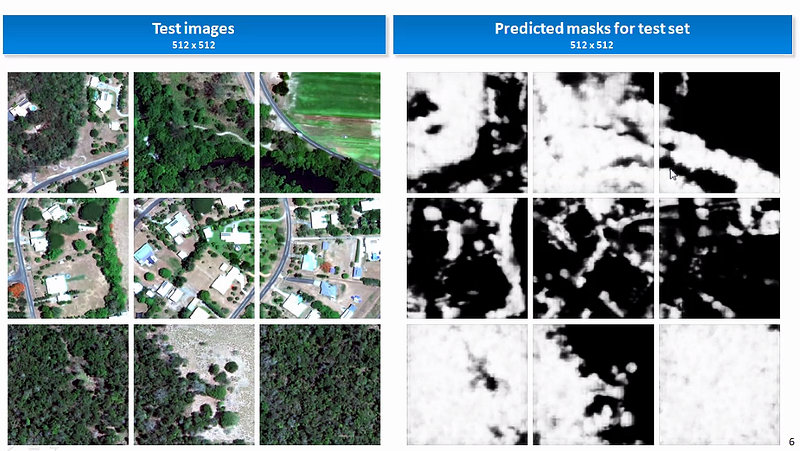

A few results are shown below. They were generated from new images that the Deep U-Net had never seen before.

Lithuania image (the model was trained on images from Australia, which has a different landscape)

Predictions over the test set
