Is the Turing Test Still Relevant?

What does it mean to be an Intelligent Machine? The Turing Test has several limitations that require us to rethink how we measure intelligence.

December 30, 2020


Or what does it mean to be an Intelligent Machine?

By Irune Lansorena, Colton Magnant, David Gamba, and Alexis Carrillo Ramirez

The ever-increasing interest in a human-machine society is beyond question. And it is no longer limited to AI practitioners: AI is, more than ever, multidisciplinary. Experts from extremely diverse areas (anthropology, psychology, neuroscience, cognitive science, sociology) are asking questions from different perspectives. But one type of driving question brings them all together: what exactly is ‘intelligence’? Can an agent show ‘general’ intelligence? Is there a single, universal intelligence? These questions lead many of us to wonder about the real relevance of well-known assessment mechanisms, in particular the Turing Test.

But wait, what’s the Turing Test about?

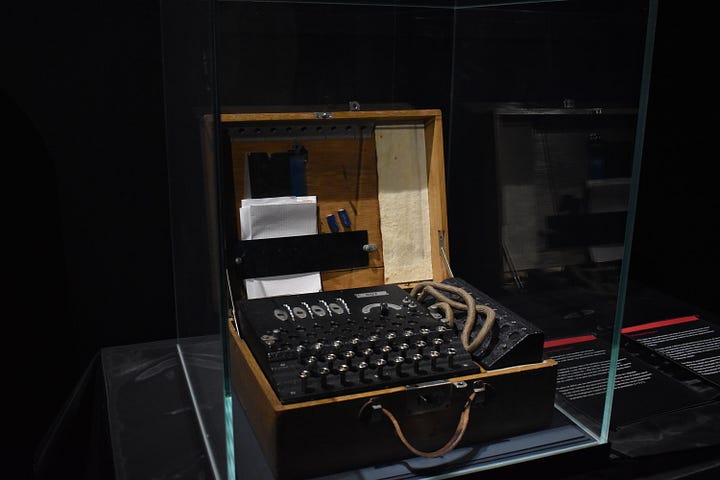

Alan Turing devised a straightforward test to evaluate intelligent machines. According to the test, if a human evaluator cannot reliably tell whether natural-language responses come from a human or from a machine, then the machine has passed.

In this article, we explore the idea of an AI passing the Turing Test (or a variant of it), and whether the test is still relevant or we need a new criterion.

We offer three perspectives on the Turing Test and on the implications of machines succeeding at it.

First, we discuss intelligence from the perspective of cognitive science, which is mostly concerned with humans. Second, we discuss the repercussions from a social researcher’s perspective. Finally, we look at it from the perspective of machine learning (ML) and Artificial General Intelligence (AGI).

What does Cognitive Science have to say?

The Wikipedia article on Turing’s original paper contains a paragraph that clearly explains the dynamics of the test:

As Stevan Harnad notes, the question has become “Can machines do what we (as thinking entities) can do?” In other words, Turing is no longer asking whether a machine can “think”; he is asking whether a machine can act indistinguishably from the way a thinker acts. This question avoids the difficult philosophical problem of pre-defining the verb “to think” and focuses instead on the performance capacities that being able to think makes possible, and how a causal system can generate them.

Given this, Turing’s test is about emulating (or imitating) intelligence rather than replicating it. What matters is the output, not the process in between. The development of Machine Learning algorithms rests on the same fundamental idea: the intention is not to replicate complex behaviors but to imitate them. For example, an Artificial Neuron (AN) borrows from Biological Neurons (BNs) the idea of receiving some inputs, combining them, and producing an output. While BNs achieve this through electrochemical processes, ANs do it through matrix algebra. Ultimately, the outputs can be very similar, but the underlying mechanisms are completely different.
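To make the contrast concrete, here is a minimal sketch of an artificial neuron in Python. It assumes the common textbook formulation (a weighted sum of the inputs plus a bias, passed through a sigmoid activation); the function names and the numbers in the usage example are illustrative and not taken from any particular library.

```python
import math


def artificial_neuron(inputs, weights, bias):
    """Receive inputs, combine them, and produce one output.

    The combination is a weighted sum plus a bias; a sigmoid activation
    then squashes the result into the range (0, 1).
    """
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weight_matrix, biases):
    """A layer of neurons: in effect, a matrix-vector product plus activation.

    Each row of weight_matrix holds the weights of one neuron.
    """
    return [artificial_neuron(inputs, row, b) for row, b in zip(weight_matrix, biases)]


# Usage with made-up values: two inputs feeding a layer of two neurons.
outputs = dense_layer([1.0, 0.5], [[0.4, -0.2], [-0.3, 0.8]], [0.1, 0.0])
print(outputs)
```

Nothing here resembles electrochemistry: the "neuron" is just arithmetic on lists of numbers, which is exactly the imitation-versus-replication point made above.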

Defining intelligence as something a machine can exhibit leads us to the psychological (cognitive science) perspective on intelligence. All the constructs that (cognitive) psychology investigates are defined in terms of observable behavior, and this includes intelligence. Assessing intelligence means counting the actions a person is capable of that are considered to be the things an intelligent person can do. However, there is no consensus on which actions denote intelligence.

General intelligence = human intelligence

Advances in psychometrics gave rise to the concept of intelligence as a single entity that accounts for high performance across tests of several abilities. Known as general intelligence, it is the mainstream model for understanding human intelligence. Psychometricians such as Charles Spearman developed the mathematical formulation that supports those measures: the g factor. Despite all this research, psychology still lacks a settled definition of intelligence; the field is still developing. If we have neither a clear understanding nor a clear definition of (human) intelligence, the definitions and implications of (non-human) artificial intelligence can only be less clear. Given that our reference standard (the Turing Test) is mainly based on assessing ‘human-like’ intelligent behavior in an agent (machine), we can ask ourselves whether this test has a solid foundation, since it rests on a still ‘unexplained’ concept: our own intelligence.
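As a rough illustration of the kind of formulation behind the g factor, the sketch below assumes Spearman’s single-factor model, in which the correlation between any two tests equals the product of their loadings on g (r_ij = l_i · l_j). With three tests, each loading can then be recovered directly from the three pairwise correlations. The correlation values are hypothetical, and the function name is ours; real psychometric work fits factor models over many tests rather than using this three-test shortcut.

```python
import math


def g_loadings_from_triad(r12, r13, r23):
    """Recover three tests' loadings on a single common factor g.

    Assumes Spearman's one-factor model, where the correlation between tests
    i and j is the product of their g loadings: r_ij = l_i * l_j.
    Then r12 * r13 / r23 = l1**2, and similarly for l2 and l3.
    """
    l1 = math.sqrt(r12 * r13 / r23)
    l2 = math.sqrt(r12 * r23 / r13)
    l3 = math.sqrt(r13 * r23 / r12)
    return l1, l2, l3


# Hypothetical correlations among three ability tests (e.g. verbal, numerical, spatial).
loadings = g_loadings_from_triad(r12=0.72, r13=0.63, r23=0.56)
print([round(l, 2) for l in loadings])  # → [0.9, 0.8, 0.7]
```

Real test correlations rarely fit a single factor this neatly, which is part of why the meaning of g is still debated.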

Is the Turing Test still relevant?

Photo by Mauro Sbicego on Unsplash

The Turing Test has been the reference for assessing whether interactions with machines can become indistinguishable from interactions with humans. Since the beginning of AI, we have assumed that passing the Turing Test should be enough to ‘validate’ the genuine intelligence of an agent. There are, however, many potential objections to the Turing Test. We have already raised one of them:

Limitation 1: No clear understanding of human intelligence

However, one could argue that the Turing Test creates an environment in which a machine can show this ‘human-like’ intelligence without any deep formalism of what intelligence means, right? So let us look at some additional limitations of the current Turing Test.

Limitation 2: Does not capture general intelligence

Current AI systems have been successful in showing intelligence in many tasks (healthcare diagnosis, computer vision, speech recognition, face recognition, NLU, financial analysis, etc.). AI has done this in an impressive and very effective way, sometimes even outperforming humans. However, few of these systems, if any, have shown ‘human-level’ intelligence. Let’s take a minute to think about us humans. We can tackle (almost) any kind of problem, and our intelligence is not restricted to narrow automation or task-specific problems.

Put simply, we are not constrained to specific tasks (apart from the limits of our prior knowledge, and even there we can use our intelligence to improve).

We apply general intelligence to our daily problems: regardless of the nature of the task or our previous experience, we can find a path that leads to a solution (sometimes closer to our desired outcome than others; that’s human life).

Limitation 3: Measures only conversational intelligence

Here is another limitation of Turing Test-based assessments (at least so far!). The Turing Test measures conversational capabilities, but, as you may already imagine, human intelligence encompasses many reasoning abilities beyond verbal reasoning, such as numerical and logical reasoning and an often underrated one: emotional intelligence. Emotional intelligence is our best ‘weapon’ for successful coexistence in our society. Could it extend to machines as well? Could it be key to fruitful coexistence in a ‘hybrid’ society in which humans and machines interact on equal terms? Perhaps we should start thinking about what this ‘coexistence’ would look like in terms of rights, obligations, and current regulation (or the lack of it).

Biggest successes?

Source: Wikimedia Commons

Meanwhile, how far we are from this future ‘hybrid’ society remains controversial, but several systems, including some from well-established companies like Google, have been noted for ‘beating’ the Turing Test. Remember the buzz around Google Duplex and the chatbot Eugene Goostman. AlphaGo from DeepMind was another big win towards a ‘more intelligent’ AI. But can we ever say that AlphaGo ‘understood’ the intuition behind the game? Moreover, AlphaGo is a tricky case: once again, an AI beat human performance only in a single, specific task, playing a game. IBM Watson won the human-versus-machine battle on Jeopardy! and brought us closer to the dream of general intelligence: Watson was able to win a real-time game competition and give answers according to the rules of the game. However, we do not live in a game or a simulated environment with well-defined rules, do we?

Our world is much more complex than that, so relying on a test that makes such strong assumptions about how well it represents the real world seems somewhat naive. A machine will have to deal with much more complex tasks if it/he/she is ever introduced into our system (society), both individually and as a member of society.

But suppose we accept the Turing Test as a valid ‘proof’ of ‘human-like’ individual intelligence. What if an agent passes it? Should that be enough to ‘validate’ the agent? Or do we need an ‘ethical’ (moral) Turing Test before deploying it into ‘our world’?

We humans are social animals by nature. If we look at AI as a new element (a stakeholder) in the social equation, things get even more complicated. Many sociologists are concerned about the current reality: we lack mechanisms to ‘emulate’, or at least simulate, a real-world environment in which we could observe the behavior of any strong (non-soft) AI agent before introducing it into our real society. Perhaps the question is no longer how we should evaluate machines’ performance (especially from an individualistic perspective), but how to let them operate ‘in the wild’ while we find new mechanisms for a smoother transition.

The Turing Test relies on conversational intelligence to determine the capacities of the machine. Some experts believe that we developed language because of our social nature. We use language to encode our experiences and express ourselves, even in our internal monologues. It may be that language is not required for intelligence, just as octopuses did not need a society to develop intelligence.

Machines, so different from us, might express completely different forms of intelligence.

Thus, the anthropocentric nature of the Turing Test might lead us to look only for a mirror of our own intelligence, so that we fail to perceive something different, something unique.

So, is the Turing Test obsolete?

It therefore seems fair to wonder whether the test is becoming obsolete or still provides a valid measure of intelligence. That depends on whether you link intelligence to language. If you do, does that mean a machine capable of AGI must be conversational? If you don’t, how can a machine prove its “General Intelligence” without talking? These questions lead us to explore the possibility of different tests for AGI.

Photo by GR Stocks on Unsplash

AI is capable of many things, and the list of areas where AI exceeds even human capabilities keeps growing. On the other hand, AI systems are still created by people and are limited in scope to their training data (perhaps extended by whatever can be generated within given parameters).

In order to discuss the potential for AI to become “more human” than a human, we must first consider what being human means to us. If we define humanity by the ability to win a match of Jeopardy!, defeat another pilot in air combat, or beat the grandmasters of Go or chess, then AI already seems to have the upper hand, but true humanity is much more complicated.

We live surrounded by “narrow” AI. We rarely stop to think about it, perhaps because of the rush of life or simply because we see it as part of normal life, but one thing is clear: AI is already part of our daily lives. We have AI embedded in our TVs, GPS apps, mobile phones, and other IoT devices in the form of virtual assistants (Alexa, Siri, Google Assistant), and we can hardly imagine choosing a leisure product without first consulting a popular recommendation system such as Netflix’s or Amazon’s.

The situation is evolving even faster because of the urgent needs created by COVID-19. The pandemic is pushing our society (sadly or not) to make the most of existing technology, including many AI-centered solutions that let us respond to human needs quickly and with less risk (remote assistance). AI has been a key factor in fighting and controlling the pandemic in many respects (sharing knowledge and findings, assessing the impact on urban mobility, policy-making), but especially in assisting overwhelmed healthcare systems (remote-service chatbots, cutting-edge robotic hospitals in China, robots for rapid, low-risk disinfection of radiology treatment rooms).

In humans, the sense of kinship and belonging to a community is sometimes so strong that we may see people who are only slightly different as completely different from us. In extreme cases, this may give rise to aggressive tribalism, notions of one’s group’s superiority, and discrimination. We’ve seen it (and unfortunately continue to see it) with people of a different color, from different countries, and of different religions. This influence is powerful even when the differences are artificial, as with the systems that colonialism imposed on Africa to divide established communities. To counter this effect, part of the current discourse on equality hinges on the unspoken notion that, while we all might express ourselves differently, at our core we are the same and deserve equal rights and respect.

But what do we do when we face a being so clearly different from us that this argument no longer holds? This is one of the key questions that a general AI passing the Turing Test would raise.