Detecting Anomalies in Martian Satellite Images Through Object Detection

Anomaly, contour, and shape detection on the surface of Mars, using data labeling and object detection algorithms, to find anomalies caused by non-terrestrial artifacts.

The Problem

The objective of this project was to detect anomalies on the Martian surface caused by non-terrestrial artifacts, such as debris from Mars lander missions, rovers, etc.

What is an anomaly?

Something that deviates from what is standard, normal, or expected. Basically, anything that should not be there.

Standard life cycle

Data Collection → Exploratory Data Analysis (EDA) → Data Labeling → Data Pre-processing → Model → Evaluation

Data Collection

At Omdena, we created an API for collecting data from the data sources, which made the work much simpler.

You can also collect data directly from HiRISE. We built a scraper for that, so if anyone needs it, let me know in the responses section.

The images are given here.

EDA and Data Labeling

I want to focus on this part more than anything.

The idea here is simple: the dataset has thousands of data points (images), and, as you can see, they are unannotated and unlabeled. Our main focus was to use object detection or segmentation algorithms to detect anomalies and contours on the surface of Mars. For any supervised object detection algorithm like YOLO, the images (in our case, images of Mars) must be annotated first.

Selecting the object of interest and saving its coordinates to an XML file or a text file (depending on the version you are using) with a labeling tool.
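For reference, here is a minimal sketch of writing and reading such an XML annotation with Python's standard `xml.etree.ElementTree` module. It assumes a Pascal VOC-style layout (`annotation`, `object`, `bndbox` with `xmin`/`ymin`/`xmax`/`ymax`), which is a common choice for XML labels; the function names and the default label `anomaly` are hypothetical, not taken from the project.

```python
import xml.etree.ElementTree as ET


def boxes_to_voc_xml(filename, width, height, boxes, label="anomaly", depth=3):
    """Build a Pascal VOC-style XML string for (xmin, ymin, xmax, ymax) boxes.

    The label name "anomaly" is a hypothetical default.
    """
    root = ET.Element("annotation")
    ET.SubElement(root, "filename").text = filename
    size = ET.SubElement(root, "size")
    ET.SubElement(size, "width").text = str(width)
    ET.SubElement(size, "height").text = str(height)
    ET.SubElement(size, "depth").text = str(depth)
    for xmin, ymin, xmax, ymax in boxes:
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = label
        bndbox = ET.SubElement(obj, "bndbox")
        for tag, value in (("xmin", xmin), ("ymin", ymin), ("xmax", xmax), ("ymax", ymax)):
            ET.SubElement(bndbox, tag).text = str(value)
    return ET.tostring(root, encoding="unicode")


def read_voc_boxes(xml_text):
    """Parse the XML back into a list of (xmin, ymin, xmax, ymax) tuples."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        bndbox = obj.find("bndbox")
        boxes.append(tuple(int(bndbox.find(tag).text) for tag in ("xmin", "ymin", "xmax", "ymax")))
    return boxes
```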

Why Unsupervised?

Well, it's simple: labeling by hand is tedious work. It means going through thousands of images, selecting each region of interest, and labeling it, and I would much rather automate that.

So what now?

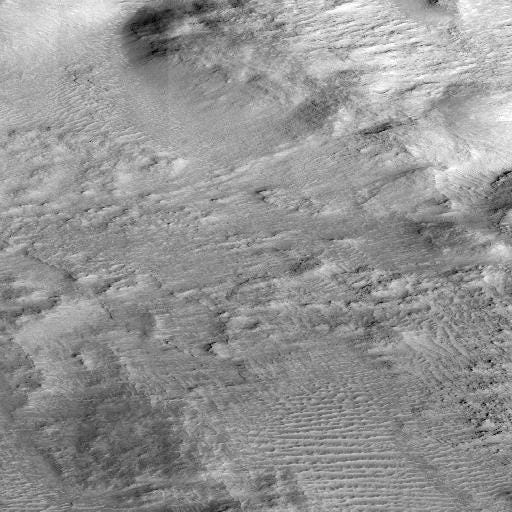

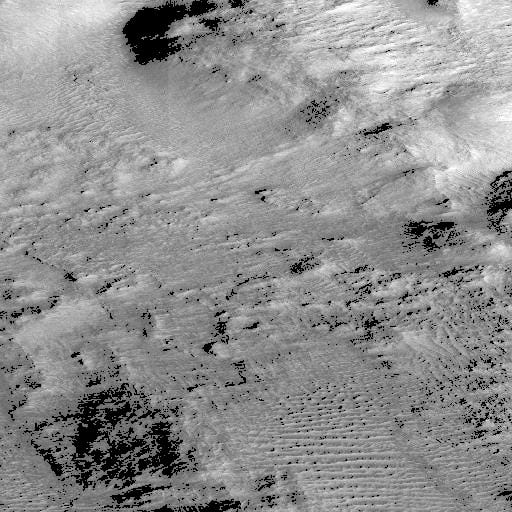

Let's take this image as an example.

This is a sample image taken from the dataset API. There are multiple objects here: craters, hills, and dunes. Our objective is to detect them without any manual intervention.

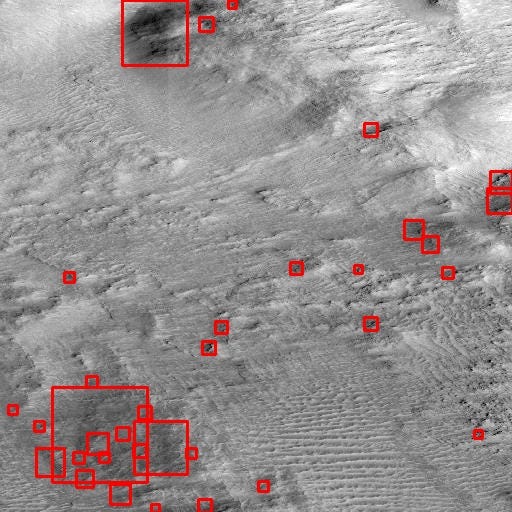

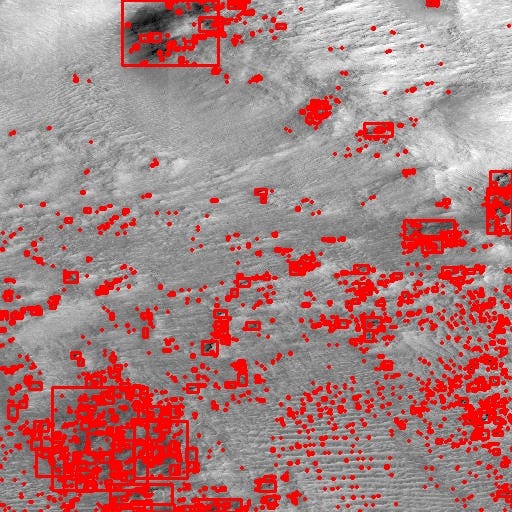

This is an example output

Requirements:

- Python

- OpenCV

- XML

- PIL

Steps:

- Sharpening

- Thresholding

- Contour Detection

- XML parsing

Sharpening

Sharpening an image increases the contrast between bright and dark regions to bring out features. The sharpening process is basically the application of a high-pass filter to an image. In a basic sense, sharpening accentuates edges and fine details that are already latent in the image.

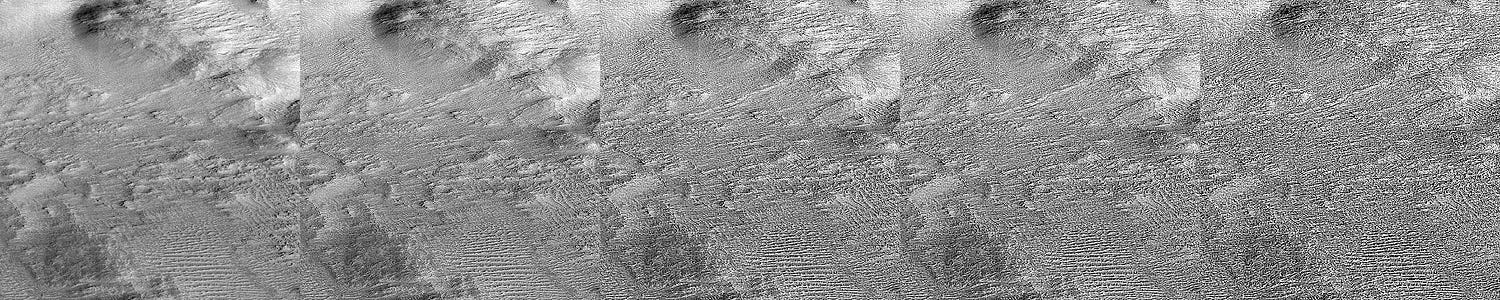

Level 1 → Level 2 → Level 3 → Level 4 → Level 5

I applied five levels of sharpening in my pipeline.

From the example above

Level 1: More clarity on the image features

Level 2: Sharper detail than level 1

Level 3: Starts distorting the image

Level 4: Destroys a lot of the features in the original image

Level 5: Almost all features are gone

Summary: Original < Level 1 < Level 2 ≤ Level 3 > Level 4 > Level 5

Note: From here onward, we use level 2 sharpening for all the images.

Thresholding

Thresholding is one of the simplest operations in image processing. The main idea is this: if a pixel value is above a certain threshold, assign it one value (say, white); otherwise, assign it another value (say, black).

Note: Thresholding is applied to grayscale images, so convert the image to grayscale first.

Why Thresholding?

Thresholding gives us an image in which some regions are highlighted in white and the other parts are black: the regions containing objects become white blobs and the rest simply becomes black. This makes the presence of objects in the image much clearer.

I designed the system for two types of threshold:

1. Binary inverse threshold: Before looking at the binary inverse threshold, let's understand the simple binary threshold. It takes three parameters (source, threshold value, max value). If the source pixel value is greater than the threshold value, the pixel is assigned the max value; otherwise it is assigned zero.

if src(x,y) > thresh, then dst(x,y) = maxValue; else dst(x,y) = 0

The binary inverse threshold is just the opposite of the binary threshold: if the source pixel is greater than the threshold, it is assigned zero; otherwise it is assigned the max value.

if src(x,y) > thresh, then dst(x,y) = 0; else dst(x,y) = maxValue

2. Zero (ToZero) threshold: This method copies the source pixel to the destination if it is greater than the threshold value; otherwise it assigns zero.

if src(x,y) > thresh, then dst(x,y) = src(x,y); else dst(x,y) = 0

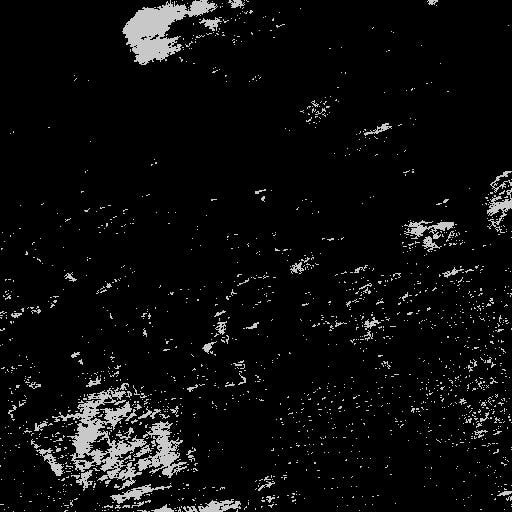

Binary Inverse Thresholding

ToZero thresholding

Comparing the two, binary inverse thresholding highlighted the saturated areas and the areas containing some information, be it small hills or craters.

ToZero thresholding darkened those same regions.

Contour Detection / Shape Detection

Contours can be explained simply as a curve joining all the continuous points (along the boundary), having the same color or intensity. The contours are a useful tool for shape analysis and object detection and recognition.

Note: We are going to use rectangular contour detection (bounding boxes) for this.

The simple idea behind this is that clusters of high-intensity pixels can be identified. These clusters are our regions of interest (the anomalies or objects in the image).

This is a two-step process:

Find contours: this can be achieved with a single function call:

cv2.findContours(ThresholdedImage,cv2.RETR_TREE,cv2.CHAIN_APPROX_NONE).

This returns the coordinates of the points along each detected contour. In OpenCV 4.x, the call returns a (contours, hierarchy) tuple.

Find the rectangle bounding each contour: this can also be achieved simply with OpenCV:

cv2.boundingRect(c)

where c is one of the contours we found. It returns (x, y, w, h): the x- and y-coordinates of the rectangle's top-left corner, followed by its width and height.

This sounds familiar, right? This is exactly what object detection algorithms like YOLO need for their annotations: bounding box coordinates such as <xmin, ymin, xmax, ymax>, and we have those coordinates (xmin = x, ymin = y, xmax = x + w, ymax = y + h).
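Converting `cv2.boundingRect` output into annotation formats is simple arithmetic. In this sketch (hypothetical helper names), `to_corners` produces `<xmin, ymin, xmax, ymax>`, and `to_yolo` produces the normalized center-x, center-y, width, and height values that YOLO's text labels use. Whether `xmax` should be `x + w` or `x + w - 1` depends on the annotation convention, so treat that choice as an assumption.

```python
def to_corners(box):
    """(x, y, w, h) from cv2.boundingRect -> (xmin, ymin, xmax, ymax).

    Assumes the exclusive convention xmax = x + w.
    """
    x, y, w, h = box
    return x, y, x + w, y + h


def to_yolo(box, img_w, img_h):
    """(x, y, w, h) -> (x_center, y_center, width, height), each in 0..1."""
    x, y, w, h = box
    return (
        (x + w / 2) / img_w,  # box centre, as a fraction of image width
        (y + h / 2) / img_h,  # box centre, as a fraction of image height
        w / img_w,
        h / img_h,
    )
```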

Contour Detection

The image above looks cluttered, so what I finally did was set up some filtering parameters, such as max dimension, max width, min width, max height, and min height. These limit the number of objects we get in the image.

After setting up the parameters.

As we can see, if we set the parameters right, we can limit the number of objects. For me, the settings were MaxDim, that is, width*height > 1000, and/or min height > 100, etc.
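The filtering step can be sketched as a simple pass over the `(x, y, w, h)` boxes. The parameter names are hypothetical; `min_area=1000` mirrors the width*height > 1000 example, and all limits that are set are combined with AND, which is an assumption since the text says "and/or".

```python
def filter_boxes(boxes, min_area=1000, min_w=None, max_w=None, min_h=None, max_h=None):
    """Keep (x, y, w, h) boxes that satisfy every limit that is set.

    Minimums are strict (w * h > min_area, h > min_h), matching the
    examples in the text; maximums are inclusive. None disables a limit.
    """
    kept = []
    for x, y, w, h in boxes:
        if min_area is not None and w * h <= min_area:
            continue  # too small overall
        if min_w is not None and w <= min_w:
            continue  # too narrow
        if max_w is not None and w > max_w:
            continue  # too wide
        if min_h is not None and h <= min_h:
            continue  # too short
        if max_h is not None and h > max_h:
            continue  # too tall
        kept.append((x, y, w, h))
    return kept
```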

The link to the code is given here.
